AI MVPs vs MVPs

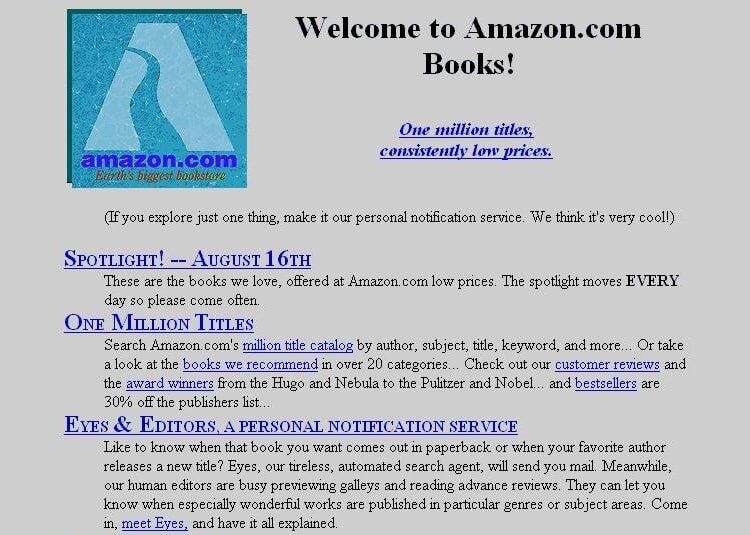

I wrote about MVPs about a month ago. Rome (or rather, Amazon) wasn't built in a day. I just taught my AI bootcamp about AI MVPs, and we looked at what Amazon was like when it first started. It was all about books! I showed them Amazon's original MVP. It is hard to imagine a company like Amazon starting off as an MVP, but here it is.

What I find exciting is that they had a neat feature called "Spotlight! These are the books we love." The pick changed every single day.

Before the era of AI recommendations, the Spotlight simply gave you a random book pick, unrelated to your purchase history. So even if you had bought a bunch of Harry Potter books, you might see a totally different genre highlighted the next time you visited.

Funnily enough, I kind of miss the surprise of not knowing what's coming next.

Facebook's MVP, on the other hand, had no smart News Feed algorithms, friend suggestions, or ads. It would just let you log in, edit your profile, and, in its slightly more advanced form, request and accept friendships and post static text on your wall.

AI MVP vs MVP?

As with any other product, an AI MVP should deliver immediate value to its target users from day one. The concept of an MVP revolves around introducing a product to the market as quickly as possible, with the minimum set of features required to make it functional and valuable.

What experience is good enough?

Things are a bit tricky with AI MVPs because the AI quality is almost certainly not going to be good on day one. So you need to define the minimum viable quality (MVQ): the lowest bar for the ‘experience’ that your users would be able to tolerate.

I’m putting together an AI lifecycle, and its final step says we should keep iterating until we meet the minimum quality we’re after.
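To make "iterate until we meet the bar" concrete, here is a minimal sketch. It assumes the MVQ can be expressed as a single metric (accuracy on a small held-out set) and a threshold you chose up front; the 0.70 bar, the `evaluate` and `first_version_meeting_mvq` helpers, and the toy model versions are all hypothetical placeholders, not part of any particular lifecycle.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical MVQ bar: the accuracy we decided users can tolerate on day one.
MVQ_ACCURACY = 0.70

Model = Callable[[str], str]


def evaluate(model: Model, examples: Sequence[Tuple[str, str]]) -> float:
    """Fraction of held-out (input, expected) pairs the model gets right."""
    correct = sum(1 for x, expected in examples if model(x) == expected)
    return correct / len(examples)


def first_version_meeting_mvq(
    versions: Sequence[Model],
    examples: Sequence[Tuple[str, str]],
    bar: float = MVQ_ACCURACY,
) -> Optional[int]:
    """Walk through successive model versions and return the index of the
    first one whose accuracy meets the bar, or None if none does yet."""
    for i, model in enumerate(versions):
        if evaluate(model, examples) >= bar:
            return i
    return None


# Toy held-out set and two iterations of a (hypothetical) image labeler.
held_out = [
    ("cat photo", "cat"),
    ("dog photo", "dog"),
    ("car photo", "car"),
    ("tree photo", "plant"),
]


def v0(x: str) -> str:
    return "cat"  # hard-coded first attempt: 1 of 4 correct


def v1(x: str) -> str:
    return x.split()[0]  # slightly smarter: 3 of 4 correct


print(first_version_meeting_mvq([v0, v1], held_out))  # → 1
```

The hard-coded `v0` scores 0.25 and misses the bar; `v1` scores 0.75 and is the first version good enough to put in front of users. In practice the metric and threshold would come from your own product and users.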

Do I need a fully functional AI model?

No. You just need an experience. It can be rough around the edges and partially hard-coded, but it should include ‘some’ AI, demonstrate potential for the future, and make a clear case for why AI is crucial to this product.

Wizard of Oz testing, where humans simulate AI responses, is a method startups sometimes use to prototype AI experiences without a fully functional model.

What does an AI MVP look like?

Content Recommendation System: An MVP might consider only user preferences within a single category (e.g., genre for movies) instead of using a more sophisticated model that takes into account user behavior, feedback, and content metadata.

Image Recognition App: An MVP could identify only a limited set of objects (e.g., plants, cars, animals) with moderate accuracy, leaving more intricate identification and higher accuracy for later versions.

Voice Assistant: The MVP could handle basic tasks like setting reminders or playing music but might struggle with context-aware conversations or more complicated queries.

Predictive Maintenance: In the case of predicting machinery failures, an MVP might only consider a few data sources, like temperature and operating time, rather than integrating a more comprehensive set of sensor data.

Self-Driving Car: An MVP might operate only in specific conditions, like clear weather during the day, rather than handling all possible scenarios.
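The content recommendation example is small enough to sketch in a few lines. This is a hypothetical illustration, assuming a tiny in-memory catalog that maps titles to genres and a list of titles the user has already watched: the MVP uses genre as its only signal and ignores behavior, feedback, and other metadata. The `CATALOG` contents and the `recommend` helper are made up for the example.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical toy catalog: title -> genre. Genre is the MVP's only signal.
CATALOG: Dict[str, str] = {
    "Alien": "sci-fi",
    "Arrival": "sci-fi",
    "Dune": "sci-fi",
    "Heat": "crime",
    "Se7en": "crime",
    "Up": "animation",
}


def recommend(watched: List[str], catalog: Dict[str, str], k: int = 2) -> List[str]:
    """Recommend up to k unseen titles from the user's most-watched genre.

    Deliberately minimal: no ratings, no viewing behavior, no other metadata.
    """
    genres = Counter(catalog[title] for title in watched if title in catalog)
    if not genres:
        return []  # cold start: nothing to base a recommendation on yet
    top_genre, _ = genres.most_common(1)[0]
    unseen = [t for t, g in catalog.items() if g == top_genre and t not in watched]
    return sorted(unseen)[:k]


print(recommend(["Alien", "Heat", "Arrival"], CATALOG))  # → ['Dune']
```

Replacing the genre count with a model that also weighs ratings and viewing behavior is the kind of upgrade that can come after the MVP has proven its value.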

Developing an AI MVP is all about balancing quality, scope, and user experience. The goal is to showcase the potential of the AI while providing enough value to gather user feedback and improve. As with any MVP, rapid iteration based on feedback and data is crucial.